An better Approach to an unsolved Problem

TLDR: Authoring fast numerical array codes on .NET is a complex task. ILNumerics Computing Engine simplifies this task: it brings a convenient syntax, which is fully compatible to numpy and Matlab. And today, we bring back the free lunch: our brand new JIT compiler not only transforms numerical algorithms into highly efficient codes, it also automatically adopts to and parallelizes your workload on any hardware found at runtime. It segments and distributes even small workloads efficiently – more fine grained than any manual configuration could do.

Author: H. Kutschbach (ILNumerics) Reading time: 12 min

Since its introduction in 2007 ILNumerics has led innovation for technical computing in the .NET world. We have enabled a short, expressive syntax for authors of numerical algorithms. Writing array based algorithms with ILNumerics feels very similar (and is compatible) to well known prototyping systems, namely: Matlab, numpy and all its successors.

Another focus of ILNumerics has always been: performance. In fact, we started the company in 2013 with the goal to build the fastest technology on earth. Today we propose a better, faster approach to array code execution: ILNumerics Accelerator.

This is part 2 of an article series on ILNumerics Accelerator. In the first part we’ve explained why automatic parallelization was too large a problem for the existing compiler landscape. In the following we start by describing the problem ILNumerics Accelerator solves. Then we’ll explain how it works.

The Problem Space

Math drives the world. High tech companies, financial institutions, medical instruments, rocket science … The most innovative projects do all have one thing in common: they are driven by numerical data and complex mathematical algorithms working on these data. Most data can be represented as n-dimensional arrays. They allow to implement great complexity without great effort. Consequently, array based algorithms play a huge role in todays most innovative industries.

Over the years we have seen many different attempts to realize the advantage of numerical arrays for small and large developer teams, working on .NET. Some large teams have even built their own solution. And indeed: building a math library in C# is easy to start with! You’ll see many low hanging fruits. With a few lines of code, you are able to create matrices and to sum them in short expressions, like ‘A + B’! Woooh!! If this is all you need then you can safely skip the rest of this article…

Often enough managers loose enthusiasm when after some months huge device codes built with such trivial ‘solution’ eventually show execution speed far below their requirements.

Optimal execution speed is only realized by efficient utilization of all compute resources.

What may sound simple is actually a problem that has been waiting for a solution for more than two decades. Todays hardware is mostly heterogeneous. Even inside a single CPU there are at least two levels of parallelism. Often, there is also at least one GPU around.

The only working way to utilize heterogeneous computing resources today was to manually distribute manually selected parts of an algorithm and its data to manually selected devices. Many tools exist which aim to help the programming expert to decide, write and fine-tune the required codes at development time. This still requires expert knowledge and intimate insights into the data flow of your algorithms. And it requires time. A lot of time! The large codes created this way need testing and maintenance.

Nevertheless, this demanding approach still cannot harness all performance potential! Needless to say that such results are far from being expressive and easy to understand. ILNumerics Accelerator is here to solve this problem for the large domain of numerical array codes.

The Big Performance Picture

Let’s widen our perspective on the performance topic! Three major factors influence execution speed:

1. The Algorithm

Basically, a program performs a transformation of data from one representation (input) into another representation (result). The transformation is described by the program´s algorithm(s). This high-level, business view on software is ‘lowered’ by the programmer when she writes the concrete program code, enabling a compiler to understand the intent. In subsequent compilation steps the code is then further lowered by one or more compilers into other representations, which can be better understood by the hardware. So, the programmer and the compiler(s) share responsibility to implement the intended transformation in a way that it is carried out on the available hardware and produces the correct result in the shortest time possible.

2. The Hardware

An optimally fast program must recognize and utilize all available hardware resources. The exact configuration and properties of all devices, however, is typically only known at runtime. This implies that a significant amount of transformation can only be done at runtime (-> JIT compiler). One may say, a program could be specialized for one specific set of computing resources. This is of course true, but it only shifts issues to the next time the hardware is to be renewed. Further, some properties of the hardware are inherently only known at runtime. Two examples: resource utilization rate and influences by other programs / threads running concurrently on the computer. If the final executable program fails to keep all parts of the hardware busy with useful instructions the program will require more time to run than necessary.

3. The Data

In general technical data is made out of individual numbers, or ‘scalars’. Traditionally, processor instructions deal with scalar data only. In array algorithms data forms arrays with arbitrary shape, size and dimensionality. This can be seen as an extension to the traditional scalar data model, since n-dimensional arrays include scalar data as a special case.

The array extension introduces great new complexity. Instead of a single number, having a type and a value, an array instruction deals with input data which may have one or many, often several million individual values! But arrays can also be empty…

While there is often exactly one way to execute a scalar instruction, to execute an array instruction efficiently requires careful plan and order and to select and align the execution strategy to the (greatly varying) data and hardware properties. Again, failing to do so (at runtime) will lead to sub-optimal execution times.

Workload & Segmentation

Together with the algorithm data forms another important factor: ‘workload’. It is a measure of the number of computational steps required to perform a certain data transform. Let’s say: the number of clock cycles of a CPU core required to compute the result of the ‘sin(A)’ instruction for a matrix A of a certain size. Mapping the workload onto a concrete hardware device yields the effort or cost (in terms of minimal time or energy) required to complete the transform on this device.

Obviously, throughout a program´s execution there are many intermediate steps, transforming various data with varying properties (-sizes, etc.). The overall workload – in a slightly simplified view – is the aggregation of all workloads of all intermediate steps.

To keep a program´s execution time small, all intermediate steps must be carefully adopted to and execute on the fastest hardware resource(s) currently available, yielding the lowest cost. Compare this to how a programmer today manually selects a certain device for (manually selected) large, predefined parts of a program at development time!

Manual segmentation and device resource selection cannot bring optimal performance for all parts of a program.

Array Codes – done (& run) right

The considerations above should make it obvious that no static compiler is able to create optimal execution performance (letting toy examples aside). Data and hardware properties are subject to change! So does the optimal strategy for lowering the individual parts of a user algorithm to hardware instructions! Lowest execution times will only be achieved, if all parallel potential of an algorithm is identified and used to execute its instructions concurrently, on all available hardware resources, and efficiently.

But let’s skip all reasoning and jump right into the interesting part! Here is how ILNumerics Accelerator achieves exactly this goal:

- Array instructions within the user program are identified and merged into ‘segments‘.

- Segments limits are optimized to hold chunks of suitable workload whose size can be quickly determined at runtime.

- Before executing a segment …

- The cost of the segment with the current data is computed for each hardware resource.

- The best (i.e.: the fastest) hardware resource is selected.

- The segments array instructions are optimized for the selected resource and current data.

- The kernel is scheduled on the selected resource for asynchronous execution and cached for later reuse.

Likely, this list requires some explanation.

[ Video does not show? Download here: https://ilnumerics.net/media/andere/ILNumerics_Segments_2022.mp4 ]

ILNumerics Accelerator takes a new approach to efficient array code execution. Instead of attempting global analysis (the top-down approach which failed in so many projects) we optimize the program from the bottom-up. Our compiler recognizes all fundamental array instructions and expressions thereof, merges them into ‘islands’ (segments) of well-known semantics and execution cost. Each segment contains a small JIT compiler, specialized to quickly compile the segments instructions at runtime into highly optimized low-level codes – for the .NET CLR and for any OpenCL device! This step recognizes and adopts to all hardware and data properties found at runtime. And it maintains compatibility with all .NET platforms.

Currently, we support unary, binary and reduction array instructions provided by ILNumerics Computing Engine and all expressions made thereof. No changes or annotations are required to existing codes. The ILNumerics language supports all features of the Matlab and numpy languages. It will be possible to build connectors to other languages and to custom array libraries exposing similar semantics.

During build each array expression is automatically replaced from the user code files with a call to a specialized, auto-generated segment. Next to hosting a JIT for the array instructions a segment also ‘knows’ the workload of its inherent computations. When at runtime the segment is called, it uses this info to compute the cost for executing the segment on each device and to identify the device suggesting earliest completion. Afterwards, it enqueues the optimized kernel to this device for computation.

It then – without waiting for a result – immediately passes on control to the next segment in the algorithm. Now, if subsequent segments do not depend on each other they are scheduled onto different threads or devices and execute in parallel!

All technical details of the new method are found in the patents and patent applications listed at the end of this article.

Advantages

ILNumerics Accelerator automatically executes your algorithm in the fastest possible way:

- During execution it finds chunks of workload which can be computed concurrently.

- It identifies the best suited hardware device available at this time.

- For each segment it adopts the execution strategy to the fastest device, and

- Computes the workload of many segments in parallel.

Therefore, it scales execution times with the hardware without recompilation or manual code adjustments.

Our compiler …

- Supports vector registers, multiple CPU cores, and all OpenCL devices.

- Removes temporary arrays and manages memory between devices transparently.

- Applies many new and important optimizations, on kernel and on segment level.

- Fully managed solution: it targets the .NET CLR, thus is compatible with all .NET platforms.

… which brings many benefits:

- Efficient use of all compute resources.

- No expert effort required.

- Shorter time to market.

- Adjusts to new hardware.

- Maintains maintainability.

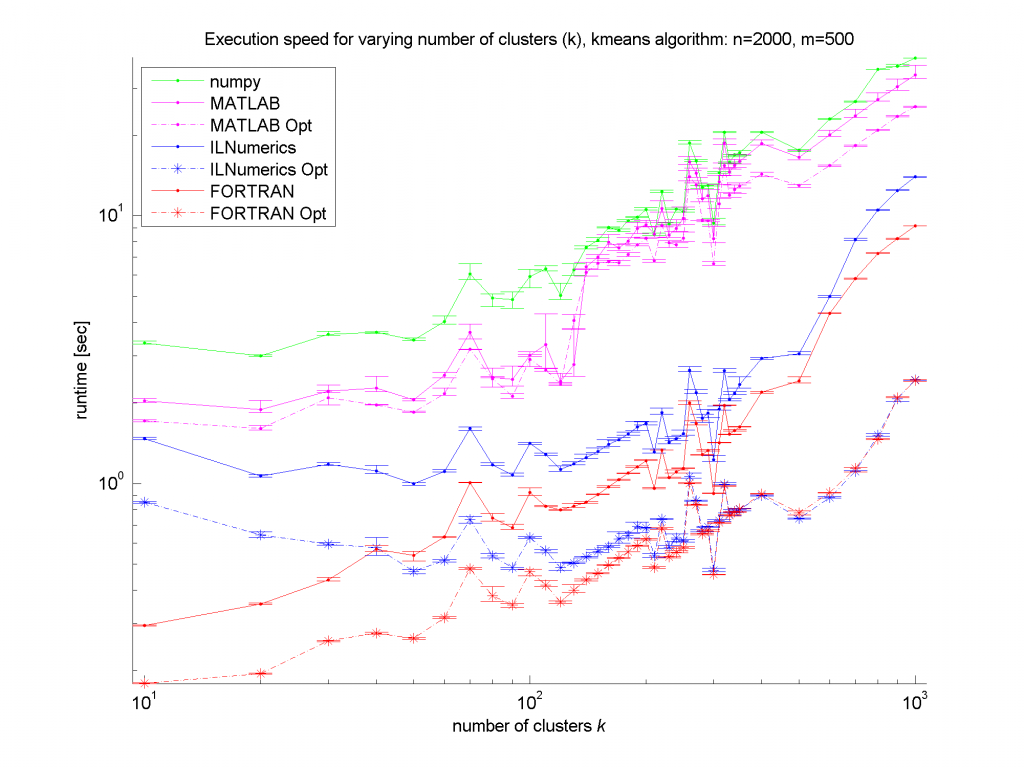

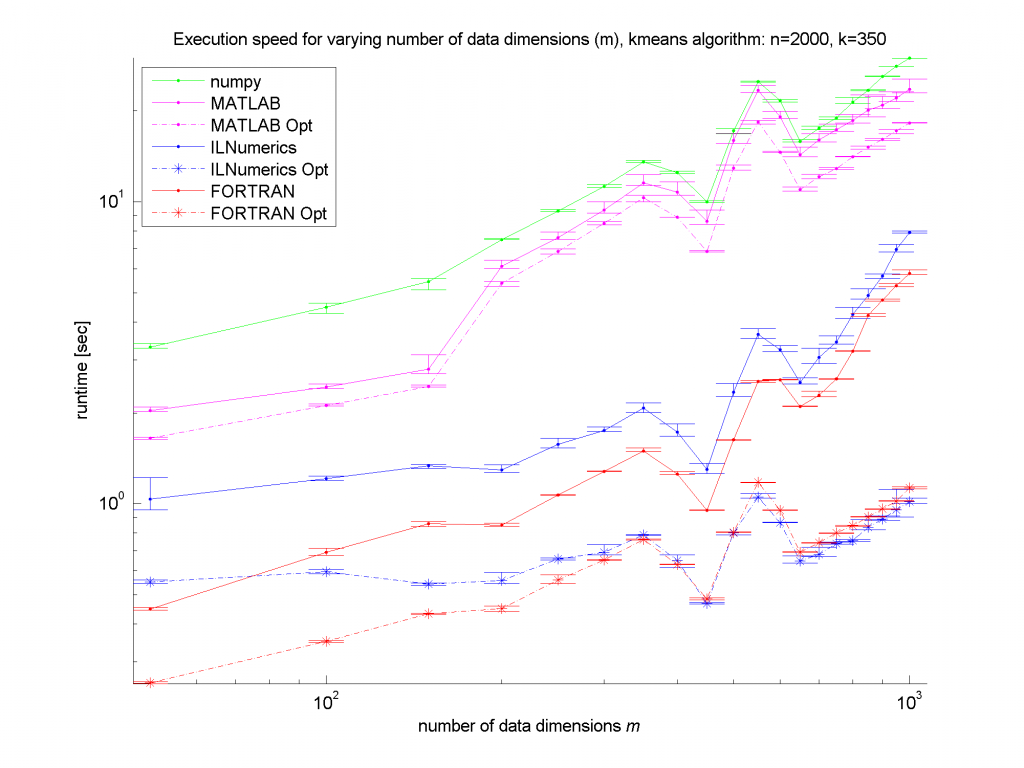

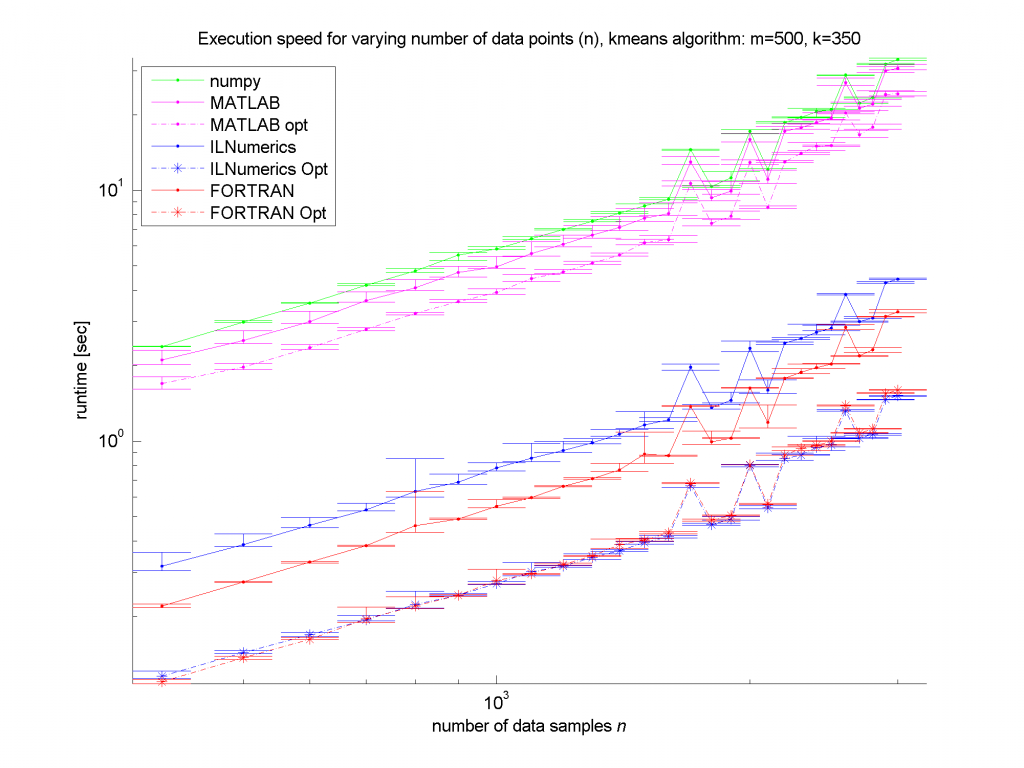

“How much faster is it”?

This is a very popular question! A comprehensive answer deserves its own article, really.

In short: it depends on your hardware. It depends on your algorithm. And it depends on your data! The question should rather be: how much more efficiently will it utilize my hardware? For the majority of real-world algorithms is true: as efficient as possible! Moreover, it does so automatically!

(If you are still longing for a number: on 2015-ish hardware we see speed increase rates between 3 and more than 300. Most of the time optimized code runs faster by at least a magnitude.)

Where can I get it ?

ILNumerics Accelerator has entered the public beta phase. It will be released with ILNumerics Ultimate VS version 7. The pre-release is available on nuget. Start here ! General documentation on the new Accelerator is found here. It will subsequently be completed within the next weeks.

Patents

ILNumerics software is protected by international patents and patent applications. WO2018197695A1, US11144348B2, JP2020518881A, EP3443458A1, DE102017109239A1, CN110383247A, DE102011119404.9, EP22156804. ILNumerics is a registered trademark of ILNumerics GmbH, Berlin, Germany.