ILNumerics releases Version 7.0

The ILNumerics team is proud and excited to announce the availability of a new major release of its ILNumerics Ultimate VS product line. This milestone represents a significant advancement in compiler technology: it introduces the first autonomously parallelizing array compiler, ILNumerics Accelerator.

Array languages and libraries such as NumPy and MATLAB are popular within the scientific community because they simplify the description of complex numerical algorithms. However, when such numerical codes are built into industrial-grade products, distributed to customers, and maintained by a larger development team for many years, existing tools reveal considerable limitations. Execution speed is possibly the greatest of them.

ILNumerics Accelerator is here to resolve this issue once and for all. Built on the convenient language of the ILNumerics Computing Engine for authoring scientific array codes directly in C#/.NET, our intelligent Accelerator compiler reformulates array codes and executes them in the most efficient manner, unguided and autonomously.

Short Dive: Building an Array Compiler …

The key to optimal hardware utilization today is, of course, the ability to parallelize the workload efficiently. Therefore, the ILNumerics Accelerator compiler automatically detects and exploits the parallel potential within consecutive array instructions of your algorithm.

The prevalent approach to automatic parallelization today involves analyzing your code to detect instructions that can execute in parallel. The code is then rewritten to allow these independent instructions to run concurrently. This approach originated at a time when C/C++ and FORTRAN were considered ‘high-level’ languages. While these languages offer some basic array support, numerical algorithms written in them inherently deal with scalar data.

In contrast, the algorithms we handle use n-dimensional arrays as the fundamental data type. When striving for real efficiency, the arrays’ complexity prohibits any compile-time decision about data independence, a suitable execution device, or a low-level implementation using vector instructions, among other factors. Too many variables determine the optimal execution strategy, and their variation is too extensive to identify at development time.

… with a fresh Approach to Parallelization …

ILNumerics takes a data-driven approach to parallelization, making all crucial decisions ‘just in time’ at runtime. This method enables us to implement the low-level internals of array instructions efficiently and eliminates the need for expensive dependency analysis of large program segments. It’s easier to determine at runtime whether an instruction relies on other data and when that data will be available.
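The idea of letting data availability drive execution can be illustrated with a small, language-neutral sketch. It is written in Python using only the standard library and is not ILNumerics code: the class `DeferredOp`, the helpers `add` and `scale`, and the function `demo` are hypothetical names chosen for this illustration. Each operation starts as soon as its last input has been produced, so no up-front dependency analysis of the whole program is required.

```python
from concurrent.futures import Future, ThreadPoolExecutor
import threading


class DeferredOp:
    """Hypothetical illustration: an operation that launches itself once
    all of its inputs are available. Not part of any ILNumerics API."""

    def __init__(self, pool, fn, *inputs):
        self.result = Future()  # completed when this operation has finished
        self._pool, self._fn, self._inputs = pool, fn, inputs
        self._lock = threading.Lock()
        deps = [x for x in inputs if isinstance(x, DeferredOp)]
        self._pending = len(deps)
        if not deps:
            self._launch()  # no dependencies: can run right away
        for dep in deps:
            # Called immediately if the dependency is already done.
            dep.result.add_done_callback(self._input_ready)

    def _input_ready(self, _future):
        with self._lock:
            self._pending -= 1
            ready = self._pending == 0
        if ready:
            self._launch()

    def _launch(self):
        try:
            args = [x.result.result() if isinstance(x, DeferredOp) else x
                    for x in self._inputs]
        except Exception as exc:  # an input failed: propagate the error
            self.result.set_exception(exc)
            return
        self._pool.submit(self._fn, *args).add_done_callback(self._finish)

    def _finish(self, inner):
        exc = inner.exception()
        if exc is not None:
            self.result.set_exception(exc)
        else:
            self.result.set_result(inner.result())


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def scale(a, factor):
    return [x * factor for x in a]


def demo():
    with ThreadPoolExecutor(max_workers=4) as pool:
        a = DeferredOp(pool, lambda: [1.0, 2.0, 3.0])     # independent of b
        b = DeferredOp(pool, lambda: [10.0, 20.0, 30.0])  # may run concurrently with a
        c = DeferredOp(pool, add, a, b)                   # waits for a and b
        d = DeferredOp(pool, scale, c, 2.0)               # waits for c
        return d.result.result()


if __name__ == "__main__":
    print(demo())
```

In this sketch, `a` and `b` never wait for each other, while `c` and `d` are chained purely by the arrival of their inputs. Whether two operations actually overlap is decided while the program runs, not when it is written.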

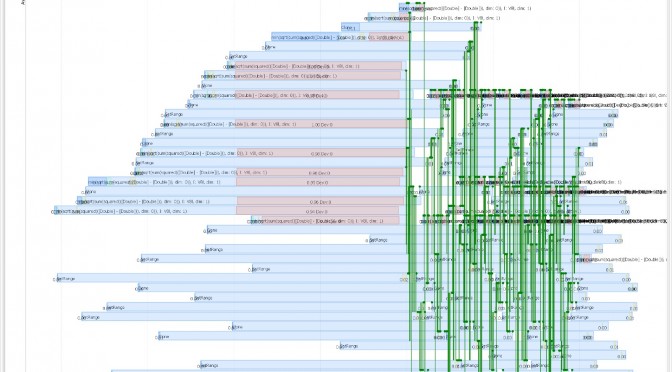

However, deciding at runtime increases the compiler’s complexity significantly. Instead of producing fixed code for an array instruction found in the original program, we now generate an intermediate program, often representing several consecutive array instructions. This intermediate program is then transformed at runtime to reflect the original instructions in a manner that respects all influencing factors and enables optimal efficiency.
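A rough way to picture such an intermediate program is a recorded chain of array steps whose execution strategy is chosen only once the actual data is known. The following Python sketch is an assumption-laden illustration, not the Accelerator's implementation: `FusedProgram`, `PARALLEL_THRESHOLD`, and the chunking rule are invented for this example, and a real compiler weighs many more factors (element type, memory layout, device, current load).

```python
from concurrent.futures import ThreadPoolExecutor
import os

# Hypothetical cut-off below which splitting the work is assumed not to pay off.
PARALLEL_THRESHOLD = 4096


class FusedProgram:
    """Hypothetical illustration: records several elementwise steps and
    decides how to execute them only when the input data arrives."""

    def __init__(self):
        self.steps = []

    def then(self, fn):
        self.steps.append(fn)
        return self

    def _apply(self, chunk):
        # One pass over the data applies all recorded steps (no temporaries
        # between the individual instructions).
        out = []
        for x in chunk:
            for fn in self.steps:
                x = fn(x)
            out.append(x)
        return out

    def plan(self, n, workers):
        """Runtime decision: return the index ranges to process."""
        if n < PARALLEL_THRESHOLD or workers < 2:
            return [(0, n)]              # small input: one sequential pass
        size = -(-n // workers)          # ceiling division
        return [(i, min(i + size, n)) for i in range(0, n, size)]

    def run(self, data, workers=None):
        workers = workers or os.cpu_count() or 1
        ranges = self.plan(len(data), workers)
        if len(ranges) == 1:
            return self._apply(data)
        with ThreadPoolExecutor(max_workers=workers) as pool:
            parts = pool.map(lambda r: self._apply(data[r[0]:r[1]]), ranges)
            return [x for part in parts for x in part]


if __name__ == "__main__":
    program = FusedProgram().then(lambda x: x * 2.0).then(lambda x: x + 1.0)
    print(program.run([1.0, 2.0, 3.0]))      # small input: sequential path
    print(len(program.plan(100_000, 4)))     # large input: split into chunks
```

The same recorded program takes a different path depending on the size of its input. Note that threads in CPython do not speed up pure-Python arithmetic; the sketch only shows where the runtime decision sits, not the performance a native implementation would achieve.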

… with Intelligence and Freedom

These programs all run asynchronously, scheduling themselves onto suitable devices for execution at runtime. They connect with previous and subsequent asynchronous neighbors on the fly, even as they progress in calculating results. They autonomously determine the optimal implementation on the device they select. Significant decision-making power comes with immense responsibility. The final program code builds up asynchronously and autonomously at runtime.

Executing the same code branch twice may result in very different machine instructions. As the creators of the compiler, we can’t predict many details of the final implementation. We can’t even forecast the actual number of asynchronous programs, which we refer to as segments, executing concurrently. The decision now rests with the computer. It has a better understanding of the situation than we do and can process information and make educated decisions much faster and more effectively.

Why another Beta, then?

The first autonomous array compiler has arrived. Are you eager to try it out? Great! Go ahead and test the current beta from nuget.org. Its performance is genuinely impressive.

The majority of our users are building industrial products and have chosen ILNumerics for its reliability and seamless integration into .NET (which means all of .NET, since 2005). The compiler will become a regular part of the ILNumerics Computing Engine once it’s ready. Currently, it’s like a young child, learning new things each day. It’s learning to walk and talk, occasionally stumbling over previously unknown obstacles. Sometimes, it even surprises its creators with unexpected efficiency.

With recent advancements, the Accelerator compiler is now set to ‘always on’ mode. This marks a significant milestone in the development of a compiler. It’s now trusted to be stable and flexible enough to handle all codes, even unseen ones, ensuring it produces correct results and serves its primary purpose: to enhance efficiency.

The compiler will continue to develop until the final release. It will become more stable and gain more real-world exposure. We would greatly appreciate your feedback, which will help it mature by then.

All previous modules of ILNumerics have been adapted for the new Accelerator. They are thoroughly tested, have undergone many bugfixes and improvements, and are available for production on nuget.org as of today.

Introducing the ILNumerics Accelerator:

[ Video does not show? Download here: https://ilnumerics.net/media/andere/ILNumerics_Segments_2022.mp4 ]