A Truth most Programmers won’t tell.

TLDR: Twenty years ago, computer manufacturers trying to boost CPU performance ran into a hard wall imposed by physical limits. It took a long time for everyone involved to realize that individual processors would no longer become significantly faster. Until then, simply waiting for faster processors had been an all-too-easy way to cope with the constantly growing demands of ever larger data and ever more complex programs. Haymo Kutschbach, founder and CEO of ILNumerics, explains the challenges of automatic parallelization on today’s hardware and why traditional compilers will not satisfy the strong demand for a feasible solution. A better, working approach is presented.

Reading time: 10 minutes

For many decades, the rule applied: “Your program is too slow? Simply buy the latest hardware!” This was made possible by the availability of (general purpose) programming languages, as well as a stream of innovations in processor architectures and the associated compilers. They allowed the programs to be written largely independently of the execution hardware. The compiler adapted abstract codes to the low-level features of the processors. Everything fit together so conveniently!

And then nature threw a spanner in the works of this easy calculation. New computers continue to obey Moore’s Law and become more powerful with each generation. But this new computing power is now distributed over many processors! A program can only use the new speed if it can use all processors at the same time. In addition, a significant part of the computing power of today’s computers is found in heterogeneous architectures such as GPUs and other accelerator hardware.

The consequences can be observed today in probably every development department: Programs are written abstractly. Efforts are made to create ‘clean code’ that can be tested and maintained. Unfortunately, such code often runs too slowly! So you start to examine the codes for optimization potential. You identify individual bottlenecks, decide manually on a parallelization strategy, implement, test and measure again. Many programmers don’t see this as a problem: their expert knowledge guarantees them a good income for many years to come! Since there are no alternatives, the enormous delays in time to market are accepted. So is the fact that the next generation of devices will again require a complete rewrite of large parts of the software.

Hardware today is far more diverse and heterogeneous than it was 20 years ago. But why is that actually a problem? Why are we still not able to automatically adapt and run our programs on such hardware again?

If it’s too slow, blame the Compiler!

For one, it’s because the compiler market has traditionally been dominated by processor manufacturers. Their compilers served to make their own hardware more accessible. Even though we’ve seen a recent trend towards vendor-independent compilers, they still continue the traditional approach: compiling (“lowering”) code of a general purpose programming language (like C or Fortran) to hardware instructions that can be executed on a specific (and previously selected) processor architecture. What we actually need, however, is a compiler that translates an abstract program for a “computer” – with all its diverse computing resources.

This task, however, is much more demanding! Why? Here we come to the second reason that has so far prevented a working solution: granularity. In order to be able to use parallel resources, the software must contain additional instructions that take care of the distribution of the individual program parts. This additional overhead makes a certain minimum program size (workload) mandatory. Parallelization only makes sense if the gain in speed through parallel execution exceeds the additional management effort!
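The break-even condition above can be made concrete with a deliberately simple cost model. The sketch below is an illustration only: it assumes that the work splits evenly across workers and that distribution costs one fixed overhead, and the function names are made up for this example.

```python
import math


def parallel_time(work, workers, overhead):
    """Estimated run time when `work` is split evenly across `workers`
    and a fixed `overhead` is paid for distribution and synchronization.
    All quantities use the same (arbitrary) time unit."""
    return work / workers + overhead


def worth_parallelizing(work, workers, overhead):
    """True if the parallel estimate beats plain sequential execution."""
    return parallel_time(work, workers, overhead) < work


def break_even_work(workers, overhead):
    """Workload above which parallel execution starts to pay off.

    Derived from: work / workers + overhead < work
             <=>  work > overhead * workers / (workers - 1)
    """
    if workers < 2:
        return math.inf  # a single worker can never amortize the overhead
    return overhead * workers / (workers - 1)
```

With 4 workers and an overhead of 30 time units, `break_even_work(4, 30)` gives 40: any program part with less sequential work than that runs faster if it is simply left alone.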

Here, the term “granularity” refers to the number of individual low-level instructions that a piece of code requires to calculate its result. Evidently, the granularity increases with the size or complexity of a program part, because more low-level instructions are executed in total. So, in order to make efficient use of parallelism, it is not enough to consider individual instructions of a program. Rather, a compiler must merge larger program parts (‘grains’) and distribute them to the available computing resources. One of the most important challenges of a parallelizing compiler is to find the optimal size of these “chunks”, and thus the optimal granularity.
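To see why the chunk size matters, consider a toy model in which every chunk pays a fixed management overhead and the chunks are processed in rounds by a fixed number of workers. This is a sketch under assumed, made-up cost parameters, not a description of how any real compiler chooses its granularity.

```python
import math


def estimate_time(n_items, chunk_size, workers, cost_per_item, overhead_per_chunk):
    """Estimate the run time for processing `n_items` in chunks.

    Chunks are handed out in rounds of `workers` chunks each. For
    simplicity every chunk is charged as a full-size chunk, so the
    result is an upper bound when the last chunk is smaller.
    """
    chunks = math.ceil(n_items / chunk_size)
    rounds = math.ceil(chunks / workers)
    return rounds * (chunk_size * cost_per_item + overhead_per_chunk)


def best_chunk_size(n_items, workers, cost_per_item, overhead_per_chunk, candidates):
    """Pick the candidate chunk size with the lowest estimated run time."""
    return min(
        candidates,
        key=lambda size: estimate_time(
            n_items, size, workers, cost_per_item, overhead_per_chunk
        ),
    )
```

For 1000 items, 4 workers, a cost of 1 per item and an overhead of 50 per chunk, chunks of a single item are estimated at 12750 time units, far slower than the 1000 units of sequential execution, while chunks of 250 items finish in about 300. Among the candidates `[1, 10, 100, 250, 1000]` the model picks 250.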

This brings us to the third reason: complexity. In general, programs can become arbitrarily complex. Compilers have the task of translating programs into another, useful form. But how does one translate information that can be arbitrarily complex? Traditional compilers achieve this goal by limiting their consideration to individual instructions in the program. They know the (manageable) set of possible translations for each of these instructions. The finished program is then little more than the chain of such individual translations. Nevertheless, traditional compilers are already considered to be extremely complex software!

Unfortunately, this “complexity reduction” trick is in direct contradiction to the necessary, minimal granularity described above. A parallelizing compiler must face the challenge of finding an optimal translation even for program chunks that consist of multiple or many instructions. As always, low-hanging fruit exists (see, e.g., TensorFlow). However, an optimal solution must not restrict itself to individual, specific instructions just because their translation is particularly simple and therefore easy to implement!

And then there is a fourth aspect that also plays into the area of complexity. In addition to the optimal chunk size (granularity), it is also important to identify those grains of a program that can be executed independently of one another. The program analysis required must also take into account the best possible granularity. A compiler must therefore be able to understand large program sections, form chunks of an optimal grain size and execute them efficiently in parallel on heterogeneous hardware.
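The independence question can be illustrated with a classic textbook test (Bernstein's conditions): two chunks may run in parallel if neither writes data that the other reads or writes. The sketch below assumes that each chunk's read and write sets are already known, which is precisely the hard part for a real compiler; the `Chunk` type and the greedy scheduler are hypothetical helpers for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    name: str
    reads: frozenset   # names of the data this chunk reads
    writes: frozenset  # names of the data this chunk writes


def independent(a, b):
    """Bernstein's conditions: no flow, anti or output dependence."""
    return not (a.writes & b.reads or a.reads & b.writes or a.writes & b.writes)


def schedule_waves(chunks):
    """Group chunks (given in program order) into waves.

    Each chunk is placed one wave after the latest earlier chunk it
    conflicts with. Chunks within one wave can execute in parallel.
    """
    waves = []
    level = {}
    for i, chunk in enumerate(chunks):
        lvl = 0
        for j in range(i):
            if not independent(chunks[j], chunk):
                lvl = max(lvl, level[j] + 1)
        level[i] = lvl
        if lvl == len(waves):
            waves.append([])
        waves[lvl].append(chunk.name)
    return waves


program = [
    Chunk("A", frozenset({"x"}), frozenset({"a"})),
    Chunk("B", frozenset({"y"}), frozenset({"b"})),
    Chunk("C", frozenset({"a", "b"}), frozenset({"c"})),
    Chunk("D", frozenset({"x"}), frozenset({"d"})),
]
waves = schedule_waves(program)
```

Here `waves` is `[["A", "B", "D"], ["C"]]`: A, B and D touch disjoint results and can run side by side, while C has to wait because it reads what A and B produce.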

Ultimately, it is the complexity of this task that has so far prevented such a compiler from being born. In general, development departments still follow the manual approach described earlier. It requires expert knowledge and enormous effort to manually perform the necessary steps (granularity and independence analysis, parallel implementation) for a specific program. But despite the enormous amount of time and the enormous maintenance issues involved, this approach only scratches the surface! It can neither address nor solve the real problem.

Are we lost?

Not yet! Let’s wrap it up:

- Traditional compilers work at too low a level of granularity. They consider too few instructions to cover a workload suitable for efficient parallelization.

- Previous attempts to include large/all program parts in an analysis have only been successful in a few special cases. Global analysis for general programs is an unsolved problem and, in the opinion of the author, will remain so for a long time to come.

But now we come to the topic of our headline! For a class of programs that is far from small, ILNumerics has succeeded in developing a compiler that fulfills all optimality requirements. It is the class of numeric, array-based programs. They are written by scientists, mathematicians, and engineers to encode the innovations of our modern times. They form the core of big data, machine learning and artificial intelligence, and of all codes that make use of them. Numerical algorithms realize innovations; moreover, they are these innovations! They make it possible to express great complexity without great effort. They handle huge amounts of data and therefore require the greatest speed.

A development department faced with the challenge of accelerating its numerical array codes can only select and use the best available language and libraries. Technical requirements often rule out prototyping environments such as NumPy and MATLAB. Individual parts may be outsourced to GPUs. Other parts are parallelized using multithreading. All of this slows down the industrial development process significantly. It is like writing GUI programs in assembler…
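A small sketch shows what the manual route looks like even for a trivial array operation. The example uses only the Python standard library; the function names, the worker count and the chunk size are hand-picked assumptions for illustration. Note that in CPython, threads do not speed up pure-Python arithmetic because of the global interpreter lock, so the sketch shows the structure of the effort rather than an actual speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_sum(values, factor):
    """The 'clean' version: one readable line of array-style code."""
    return sum(v * factor for v in values)


def scale_and_sum_parallel(values, factor, workers=4, chunk_size=10_000):
    """The hand-parallelized version of the same computation.

    Granularity (`chunk_size`) and the degree of parallelism (`workers`)
    are fixed by hand and must be re-tuned for every new machine.
    """
    # Step 1: cut the data into chunks of the chosen grain size.
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    # Step 2: distribute the chunks; they are independent by construction.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda chunk: scale_and_sum(chunk, factor), chunks)
        # Step 3: combine the partial results.
        return sum(partials)
```

Both functions return the same result, but the second one mixes the algorithm with decisions about chunking, distribution and reduction. These are exactly the parts that have to be revisited whenever the target hardware changes.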

A Better Approach to faster Array Codes

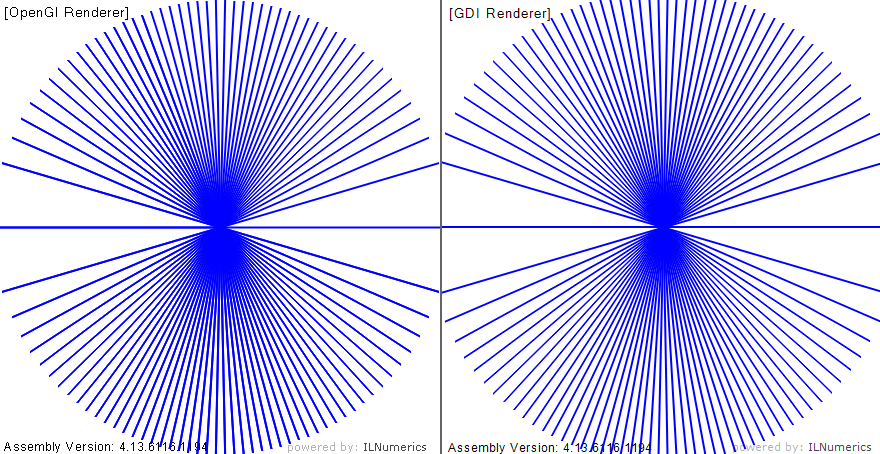

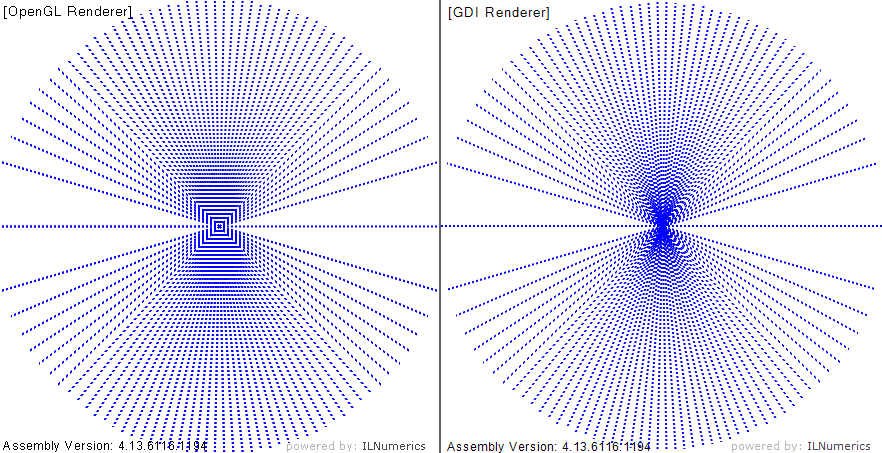

ILNumerics proposes a new, faster approach to the execution of array codes. ILNumerics Accelerator automatically finds parallel potential in array-based algorithms (in the style of NumPy, MATLAB, or ILNumerics) and efficiently distributes their workload to heterogeneous computing resources, dynamically and at runtime, when all important information is available. It once again scales the speed of your algorithm to any heterogeneous hardware, without recompilation.
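Why it helps to decide at runtime can be shown with a toy cost model: the size of an array is only known when the program runs, and it determines which device is the cheapest. The sketch below is purely illustrative and is not the ILNumerics Accelerator implementation, which this article does not detail; the device list and all cost figures are invented assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    startup_cost: float      # fixed cost per dispatched operation (arbitrary units)
    cost_per_element: float  # marginal cost per array element

    def estimate(self, n_elements):
        """Estimated cost of running one array operation on this device."""
        return self.startup_cost + self.cost_per_element * n_elements


# Hypothetical devices with made-up cost figures.
DEVICES = (
    Device("single core", 0.0, 1.0),
    Device("multicore", 200.0, 0.25),
    Device("gpu", 5000.0, 0.01),
)


def pick_device(n_elements, devices=DEVICES):
    """Choose the device with the lowest estimated cost for this array size."""
    return min(devices, key=lambda d: d.estimate(n_elements)).name
```

Under these assumed numbers, an array of 100 elements stays on a single core, 10,000 elements go to the multicore path, and 1,000,000 elements are worth the GPU's startup cost. A decision fixed at compile time would have to commit to one of these paths without knowing the size.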

The online documentation describes technical details of the ILNumerics Accelerator Compiler. Make sure to register for our newsletter here and don’t miss any news!

Where can I get it?

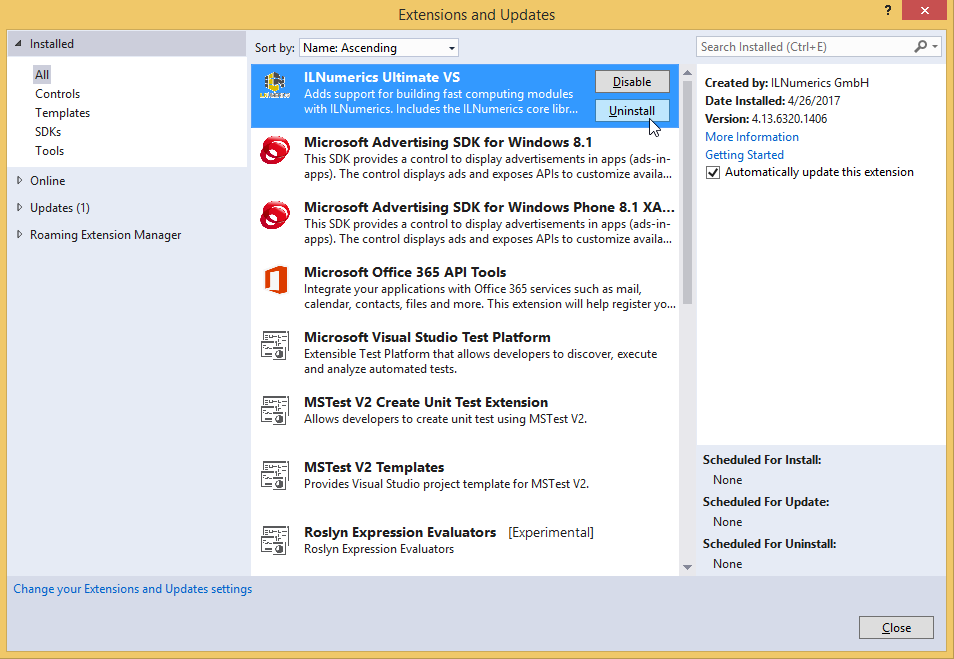

ILNumerics Accelerator has entered the public beta phase. It will be released with ILNumerics Ultimate VS version 7. The pre-release is available on nuget. Start here! General documentation on the new Accelerator is found here. It will be completed over the coming weeks. Read the getting started guide(s), get your hands dirty, and please let us know what you think!

Patents

ILNumerics software is protected by international patents and patent applications.

DE102011119404.9, WO2018197695A1, US11144348B2, CN110383247A, JP2020518881A, EP3443458A1, EP22156804, US20230259338A1, JP7495028B2, CN116610436A, EP23173406.2, PCT/EP2024/062463

ILNumerics is a registered trademark of ILNumerics GmbH, Berlin, Germany.